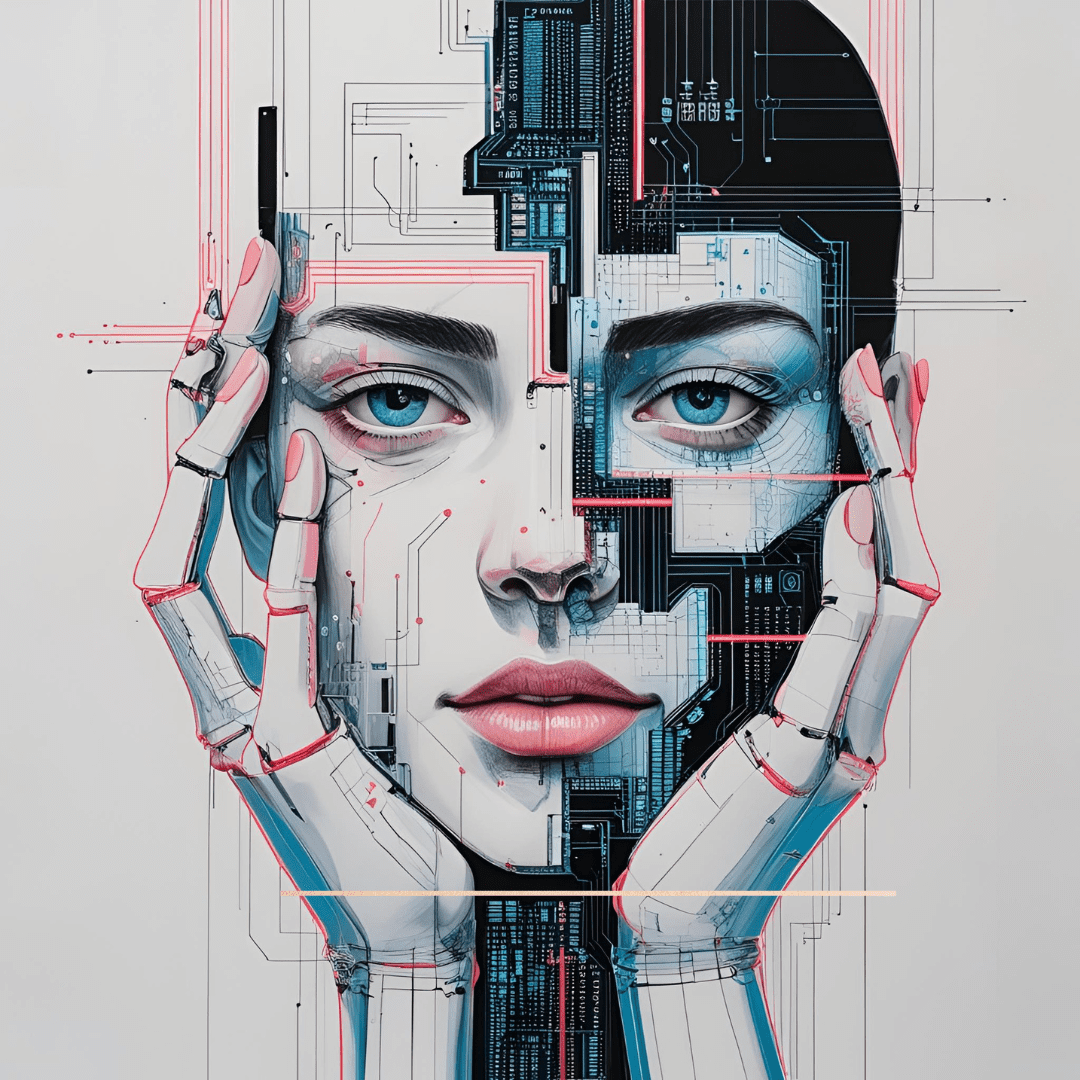

Artificial Intelligence (AI) is no longer just about numbers and logic. It’s now also being used to recognize human emotions. AI tools analyze facial expressions, tone of voice, and written text to infer what we’re feeling. Despite major advances, emotion detection is still far from fully accurate. Let’s explore how AI identifies emotions and what limitations the technology still faces.

Facial Expression Analysis: How AI Reads the Human Face

One of the most common ways AI detects emotions is through facial expression analysis. Computer vision software uses a camera to track the movements of facial muscles and map them to emotions such as happiness, sadness, anger, and surprise.
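
To make “mapping muscle movements to emotions” concrete, here is a minimal Python sketch. It assumes an upstream vision model has already detected which Action Units (AUs) from the Facial Action Coding System (FACS) are active. It then compares those AUs with commonly cited prototype combinations, such as AU6 (cheek raiser) plus AU12 (lip corner puller) for happiness. The Jaccard-overlap scoring and the `match_emotion` function are illustrative assumptions. They do not describe how any particular product works.

```python
# Minimal sketch: match detected FACS Action Units (AUs) to emotion prototypes.
# Assumes AU detection already happened upstream; the overlap scoring is an
# illustrative choice, not a specific vendor's method.

# Commonly cited prototype AU combinations for a subset of basic emotions.
PROTOTYPES = {
    "happiness": {6, 12},       # cheek raiser + lip corner puller
    "sadness": {1, 4, 15},      # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": {1, 2, 5, 26},  # inner/outer brow raisers + upper lid raiser + jaw drop
    "anger": {4, 5, 7, 23},     # brow lowerer + upper lid raiser + lid tightener + lip tightener
}


def jaccard(a: set, b: set) -> float:
    """Fraction of AUs the two sets share (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def match_emotion(detected_aus: set) -> tuple:
    """Return the prototype emotion with the highest overlap, plus its score."""
    scores = {emotion: jaccard(detected_aus, aus) for emotion, aus in PROTOTYPES.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


print(match_emotion({6, 12}))  # ('happiness', 1.0)
print(match_emotion({12}))     # ('happiness', 0.5)
```

A lip-corner pull without the cheek raiser (AU12 alone) is often described as a polite or posed smile. It still scores closest to happiness here, but with lower confidence. This illustrates why faked or social expressions are hard to interpret from muscle movements alone.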

These AI systems are trained on large databases of facial images in which muscle movement patterns are labeled with specific emotions. During training, the system learns which patterns tend to accompany each emotion and then applies that knowledge to new faces. Companies like Affectiva and Realeyes develop tools that analyze facial expressions to gauge how users feel. The technology is used in sectors including advertising, education, and mental health to measure people’s reactions to products, videos, or situations.
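
The training step can be illustrated with one of the simplest supervised methods, a nearest-centroid classifier. In this hypothetical sketch, each face is reduced to four Action Unit intensities (AU6, AU12, AU4, AU15) on a 0 to 5 scale. The tiny labeled dataset is invented for illustration. Real systems use far larger datasets and more capable models, such as deep neural networks.

```python
import math
from collections import defaultdict

# Hypothetical labeled training data: (AU6, AU12, AU4, AU15) intensities -> label.
TRAINING_DATA = [
    ((4, 5, 0, 0), "happiness"),
    ((3, 4, 0, 1), "happiness"),
    ((0, 0, 3, 4), "sadness"),
    ((0, 1, 4, 3), "sadness"),
]


def train_centroids(examples):
    """Average the feature vectors of each label to get one centroid per emotion."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


centroids = train_centroids(TRAINING_DATA)
print(centroids["happiness"])            # [3.5, 4.5, 0.0, 0.5]
print(predict(centroids, (3, 3, 1, 0)))  # happiness
```

The classifier can only reproduce the patterns present in its training labels. If the dataset is dominated by one culture or group of people, the model may be less accurate for everyone else.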

However, these systems are still far from perfectly accurate. Emotions are complex and subtle, and the way someone expresses them can vary significantly with culture, context, and personality. Facial expressions can also be faked or suppressed, which makes it hard for AI to read them with full precision.

Voice Tone Analysis: Identifying Emotions Through Speech

Another method AI uses to detect human emotions is voice tone analysis. Systems that analyze speech can track shifts in pitch, volume, and speaking rate to detect emotions such as anger, happiness, sadness, or anxiety.

For example, when someone speaks quickly and with a high pitch, the AI might detect signs of anger or nervousness. A softer, slower tone might be interpreted as sadness or calmness. Companies like Beyond Verbal and Cogito are developing technologies that analyze the human voice to better understand the speaker’s emotional state.
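
The pitch, volume, and speed cues can be sketched in Python as a toy illustration. The sketch makes several assumptions:

- Audio arrives as a list of float samples.
- Pitch is estimated with a basic autocorrelation method.
- Speaking rate (syllables per second) is supplied from elsewhere.
- The 20% thresholds and baseline values are invented for the example.

Production systems typically learn such rules from data rather than hard-coding them.

```python
import math

# Toy sketch of voice-based emotion cues. Assumptions: mono audio as a list of
# floats, speaking rate supplied separately, and made-up 20% thresholds
# relative to the speaker's own baseline.


def rms_volume(samples):
    """Root-mean-square amplitude, a simple loudness measure."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch(samples, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate fundamental frequency (Hz) from the autocorrelation peak."""
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    best_lag, best_corr = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        corr = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag else 0.0


def arousal_label(features, baseline):
    """Compare pitch, speaking rate, and volume with the speaker's baseline."""
    pitch_ratio = features["pitch_hz"] / baseline["pitch_hz"]
    rate_ratio = features["rate_sps"] / baseline["rate_sps"]
    volume_ratio = features["rms"] / baseline["rms"]
    if pitch_ratio > 1.2 and rate_ratio > 1.2:
        return "high arousal (anger or nervousness?)"
    if volume_ratio < 0.8 and rate_ratio < 0.8:
        return "low arousal (sadness or calmness?)"
    return "undetermined"


# Synthetic 0.25 s tone at 200 Hz standing in for a voiced sound.
SAMPLE_RATE = 8000
tone = [0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE) for n in range(2000)]

features = {
    "pitch_hz": estimate_pitch(tone, SAMPLE_RATE),
    "rms": rms_volume(tone),
    "rate_sps": 5.5,  # hypothetical syllables per second
}
baseline = {"pitch_hz": 150.0, "rate_sps": 4.0, "rms": 0.3}  # hypothetical baseline
print(round(features["pitch_hz"]))        # 200
print(arousal_label(features, baseline))  # high arousal (anger or nervousness?)
```

The sketch compares each feature with the speaker’s own baseline rather than with fixed numbers. This is one simple way to account for people whose normal voice is naturally high, fast, or quiet. The rule only reports high or low arousal, so on its own it cannot tell anger from nervousness. That mirrors the ambiguity in the example above.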

Although voice analysis is a major step forward in emotion detection, it has notable limitations. Background noise can distort the recording, and physical conditions such as a sore throat can change how a person sounds. As a result, AI can’t always tell a genuine emotion apart from these other influences.

Text Sentiment Analysis: Finding Emotions in Written Words

Text analysis is another widely used way to detect emotion. Sentiment analysis systems scan written content to identify the emotional tone behind it. They can be applied to texts such as social media posts, emails, or product reviews.

These systems evaluate words, phrases, and sentence structure to classify the tone as positive, negative, or neutral. Some go further and use word choice and writing style to infer specific emotional states. For example, words like “happy,” “excited,” and “grateful” may suggest positive emotions, while “sad,” “angry,” or “hopeless” can indicate negative ones.
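
A minimal lexicon-based version of this idea fits in a few lines of Python. The word lists and the one-word negation rule are simplified assumptions for illustration. Established tools use much larger scored lexicons and more rules, and many modern systems rely on trained language models instead.

```python
import re

# Tiny hypothetical lexicons; real lexicons contain thousands of scored entries.
POSITIVE = {"happy", "excited", "grateful", "great", "love"}
NEGATIVE = {"sad", "angry", "hopeless", "terrible", "hate"}
NEGATORS = {"not", "never", "no"}


def lexicon_sentiment(text):
    """Count lexicon words, flipping any word directly preceded by a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = int(token in POSITIVE) - int(token in NEGATIVE)
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity  # "not happy" counts as negative
        score += polarity
    if score > 0:
        return "positive", score
    if score < 0:
        return "negative", score
    return "neutral", score


print(lexicon_sentiment("I feel grateful and excited!"))  # ('positive', 2)
print(lexicon_sentiment("I am not happy with this."))     # ('negative', -1)
print(lexicon_sentiment("Oh great, I'm so happy my flight got cancelled."))  # ('positive', 2)
```

The third example exposes a typical weakness. A sarcastic complaint scores as positive because the scorer sees only the words “great” and “happy,” not the intent behind them.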

However, this method also has flaws. Written language can be ambiguous, and people often use sarcasm or humor, which makes it hard for AI to identify the writer’s true feeling. Human emotions can also be expressed in subtle ways that text alone can’t fully capture.

The Challenges of Emotion AI

Despite impressive progress, AI still faces several challenges in accurately detecting human emotions. As mentioned, facial expressions can be manipulated, voice tone can be affected by outside factors, and text can be interpreted in different ways. AI also struggles to detect complex or mixed emotions, such as when a person feels confused or emotionally conflicted.

Another major issue is that emotions are highly individual and shaped by culture. People from different cultures or backgrounds may express the same feeling of joy in very different ways. As a result, AI may work well in some settings and less accurately in others. The lack of a universal, standardized way of expressing emotions is a major obstacle to developing emotionally intelligent systems.

The Future of Emotion Recognition Technology

Even with current limitations, AI is advancing rapidly, and new possibilities are emerging. With more advanced algorithms and richer, more diverse datasets, future AI systems are expected to become more accurate at detecting human emotions. The integration of multimodal data—combining facial, vocal, and textual analysis—could lead to a more complete and reliable understanding of emotional states.
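
Multimodal systems differ in where they combine signals. One common and easy-to-explain option is late fusion. Each modality is scored separately, and the per-emotion probabilities are then averaged with weights. The sketch below assumes that setup, and the weights and probabilities are made up. Other designs are also used, such as combining raw features before classification.

```python
# Minimal late-fusion sketch: each modality outputs its own probability
# distribution over emotions, and the results are combined by weighted average.
# Modality names, weights, and probabilities below are hypothetical.


def late_fusion(modality_probs, weights):
    """Weighted average of per-modality emotion probabilities.

    Only modalities present in `modality_probs` contribute, and their weights
    are renormalized, so a missing input (e.g. no camera) is handled gracefully.
    """
    emotions = set()
    for probs in modality_probs.values():
        emotions.update(probs)  # collect every emotion any modality reported
    total_weight = sum(weights[m] for m in modality_probs)
    return {
        emotion: sum(weights[m] * probs.get(emotion, 0.0) for m, probs in modality_probs.items())
        / total_weight
        for emotion in sorted(emotions)
    }


weights = {"face": 0.4, "voice": 0.3, "text": 0.3}
observations = {
    "face": {"happiness": 0.7, "neutral": 0.3},
    "voice": {"anger": 0.5, "happiness": 0.3, "neutral": 0.2},
    "text": {"happiness": 0.6, "neutral": 0.4},
}
fused = late_fusion(observations, weights)
print({e: round(p, 2) for e, p in fused.items()})
# {'anger': 0.15, 'happiness': 0.55, 'neutral': 0.3}
```

Here the voice model leans toward anger while face and text lean toward happiness. The fused result favors happiness but keeps some probability on anger instead of forcing a single label. This is one way a system can represent mixed or uncertain emotional states. Because weights are renormalized over the modalities present, the same function also works when only text is available.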

As the technology matures, it may also be applied in more ethical and human-centered ways that take context and emotional complexity into account. Integrating emotion recognition into fields such as mental health, customer service, and marketing could offer significant benefits by improving emotional understanding and support. However, these technologies must be developed under strong ethical guidelines that protect privacy and prevent misuse of emotional data. Building emotionally intelligent AI requires a multidisciplinary approach spanning computer science, psychology, neuroscience, and ethics, so that the technology serves society responsibly and effectively.
